BBC London Home Affairs Correspondent

BBC

A man who is bringing a High Court challenge against the Metropolitan Police after live facial recognition technology wrongly identified him as a suspect has described it as “stop and search on steroids”.

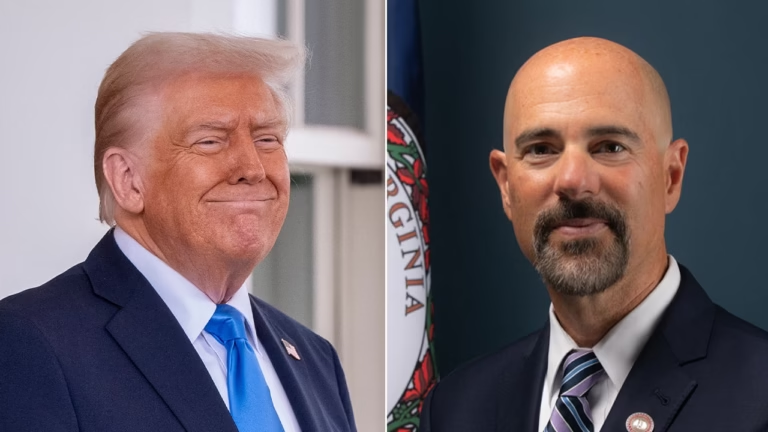

Sean Thompson, 39, was stopped by police outside London Bridge Tube station in February last year.

The privacy campaign group Big Brother Watch said the judicial review, due to be heard in January, was the first legal case of its kind against the “intrusive technology”.

The Met, which announced last week that it would double its deployments of live facial recognition technology (LFR), said it was removing hundreds of dangerous offenders from the streets and was confident its use of the technology was lawful.

LFR maps a person’s unique facial features and matches them against faces on a watch-list.

Last month, the Met said it had made more than 1,000 arrests using the technology since January 2024, including of alleged paedophiles, rapists and violent robbers, and that 773 of those arrests had led to a charge or caution.

It said that since January 2025 there had been 457 arrests and seven false alerts.

But Mr. Thompson said his experience of being stopped was “scary” and “aggressive”.

“Every time I come past London Bridge, I think of that moment. Every time.”

He explained how he had been returning home from a shift in Croydon, south London, with the community group Street Fathers, which aims to protect young people from knife crime.

As he passed a white van, he said, police approached him and told him he was a wanted man.

“When I asked what I was wanted for, he said, ‘That’s what we’re here to find out.’”

He said officers asked him for his fingerprints, but he refused, and he was allowed to leave after about 30 minutes, once he had shown them a photo of his passport.

Mr. Thompson says he is bringing the legal challenge because he is concerned about the impact of LFR, especially if young people are misidentified.

“I want structural change. This is not the way forward. It is like living in Minority Report,” he said, referring to the film in which technology is used to predict crime.

“This is not the life that I know. It is stop and search on steroids.

“I can only imagine what kind of damage it could do if it is making mistakes with me, someone who works with the community.”

‘Intrusive’ or ‘making London safe’?

Big Brother Watch, whose director Silkie Carlo is bringing the legal challenge with Mr. Thompson, said this was the first time a case of misidentification had come before the High Court.

“This is effectively a new power, absent any democratic scrutiny,” said senior advocacy officer Madeleine Stone. “There are no specific laws on the use of facial recognition; the police are effectively writing their own rules on how they use it.

“Sean’s legal challenge is an important opportunity for the government and the police to review how this technology is spreading across London in such an unacceptable fashion.”

A Met Police spokesperson said that, because of ongoing proceedings, the force was unable to comment fully on Mr. Thompson’s case, but it believed its use of LFR was lawful.

“We continue to engage with our communities about how this technology works, to help them understand that there are strict checks and balances in place to protect people’s rights and privacy.”

In July, the Home Office said it would set out its plans for the future use of LFR in the coming months, including a legal framework and safeguards.

The Met plans to double its use of LFR to up to 10 times a week across five days, up from four times a week across two days.

Announcing the plans last week, Commissioner Sir Mark Rowley told me the technology was “making London safe”.

“There are many wanted criminals. This helps us to round them up.”

He pointed to a case in which a registered sex offender, who was banned from being alone with young children, was picked up on LFR cameras in Denmark Hill, south-east London, with a six-year-old girl in January.

“I think most people would ask two things. Is it right? Is it appropriate? We have worked really hard on that.”

The Met has said it works within existing human rights and data protection laws, and that its approach has been tested by the National Physical Laboratory to check that there is no gender or racial bias.

“If you’re not wanted, the image is deleted immediately,” the commissioner told me.

“The council CCTV you walk past, or shop CCTV, keeps your face for 28 days. We keep your face for less than a second. Unless you’re wanted, in which case we arrest you.”

The Met has also announced plans to use LFR over the August Bank Holiday on the approaches to this year’s Notting Hill Carnival, although not within the boundaries of the event itself.

It says the move will help protect the carnival from “the small minority of individuals” who intend to cause harm, and that the watch-list will include wanted suspects as well as missing people “who may be at risk of criminal or sexual abuse”.

From September, a pilot scheme is due to be tested in Croydon, south London, where fixed cameras will be installed on street furniture instead of being operated by a team in a mobile van.

The force says the cameras will be switched on only when officers are using LFR in the area.

‘An algorithm and a camera’

“The Met Police has ramped up LFR in an extraordinary fashion over the years, which raises questions about racial discrimination and the communities they are targeting,” said Madeleine Stone.

The force has said its use of the technology is data-led and based on crime hotspots, and that it comes alongside a boost to neighbourhood policing in those areas.

However, it has also said it must make savings because of “real-terms funding cuts”, and about 1,700 officers, PCSOs and staff are expected to be lost by the end of the year.

“They are replacing a police officer, who can know their community, with an algorithm and a camera,” said Ms. Stone.

Mr. Thompson told me he was concerned about LFR being deployed in communities where trust in policing was already low, and contrasted this approach with the work being done by Street Fathers.

“We talk to the kids, we make time for the kids, we put ourselves in the hotspots.”

He said parents regularly called the group, asking them to remove weapons found in their children’s bedrooms.

“We are doing a job where the community trusts us, not the police. We do not need machines for that. It is about human contact.”